How the process for data science projects works

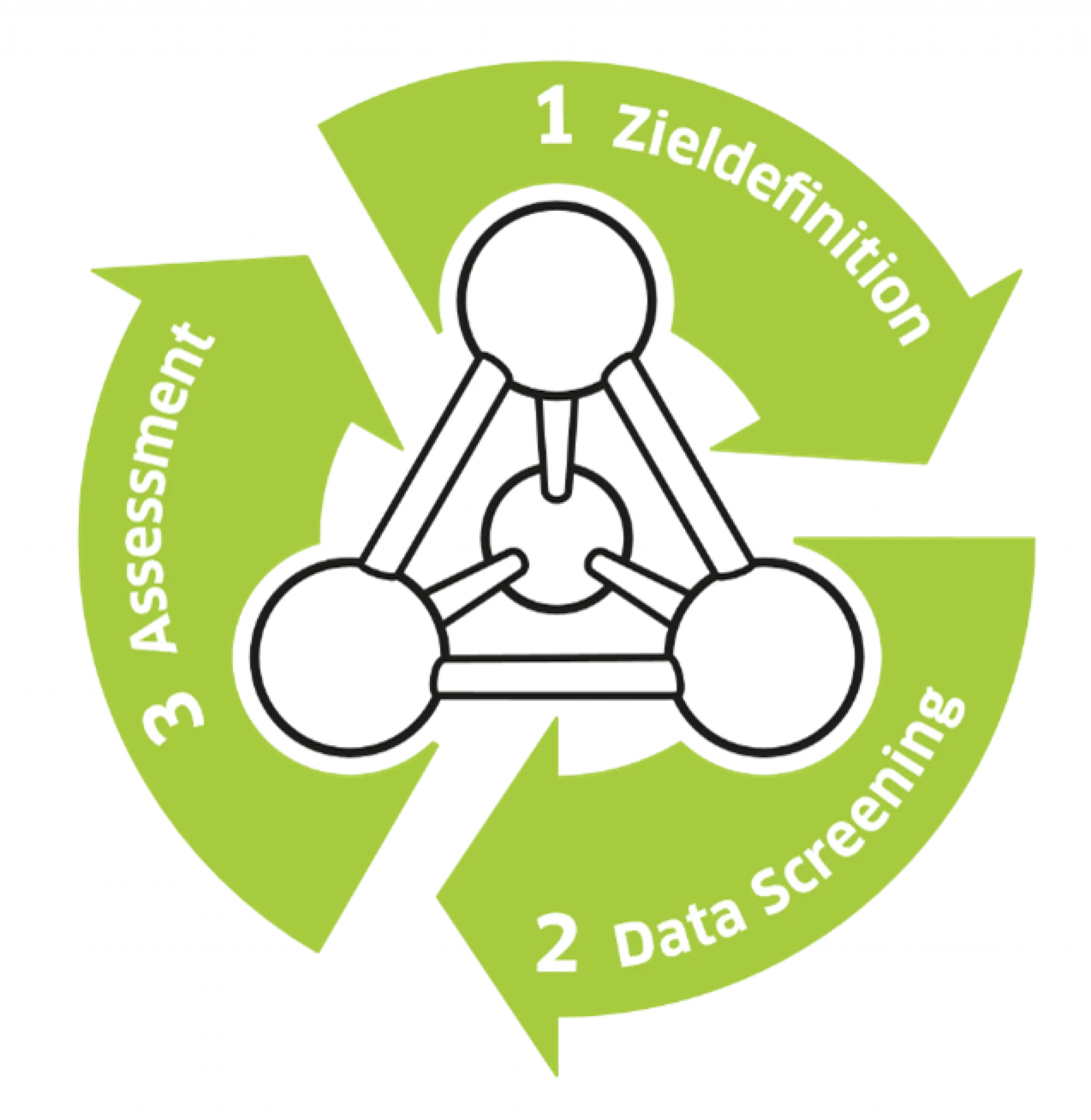

The Data Science Framework (DSF) from ControlTech Engineering AG supports companies in analyzing their data. Its standardized three-step process revolves around the right target definition, data screening and data assessment. Like a navigation system, it guides you toward your goals.

Data science is on everyone's lips, and ControlTech Engineering AG (CTE) from Liestal is positioning itself in this field as well. At the Pharma Forum on April 25, 2024, Stefan Kramberg gave an update on our work. Last year's specialist presentation on the data science framework had already covered collecting and analyzing data; this year's follow-up demonstrated how important contextualization is. "We had the theoretical model, but not enough data, and the data quality was inadequate. That was a source of frustration. This year we really wanted to show practical results," explains data specialist and project manager Stefan Kramberg.

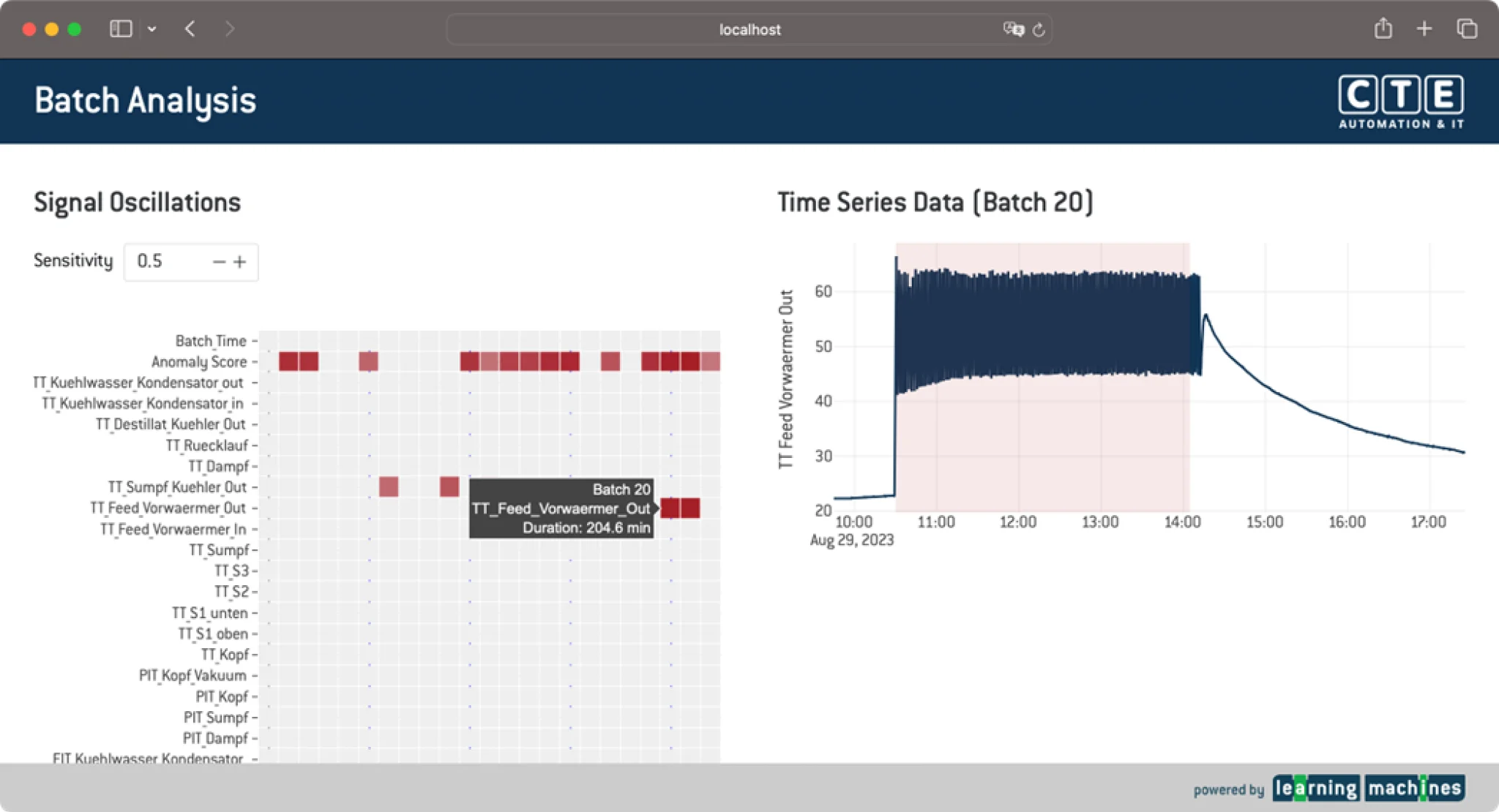

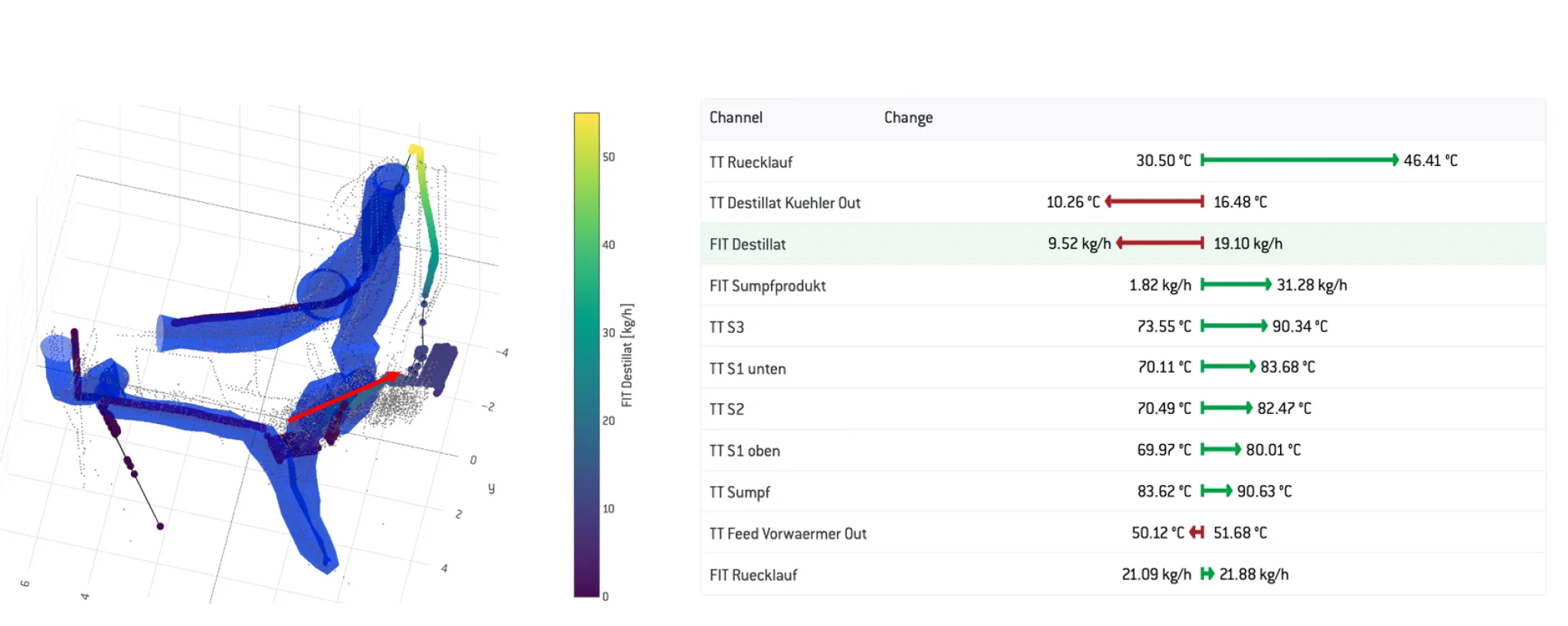

Then, at the beginning of the year, came the good news: "We've done it! The data analysis is helping us to optimize the manufacturing process," a delighted Stefan Kramberg reports. The pilot project at the Institute of Chemistry and Bioanalytics at the FHNW in Muttenz focused on a plant that does not produce for the market but is in no way inferior to a commercial production plant in size or system architecture. This made it possible to use the plant flexibly for our test. Siemens' PCS neo control system served as the basis, and the data was collected and structured with the AVEVA PI System.

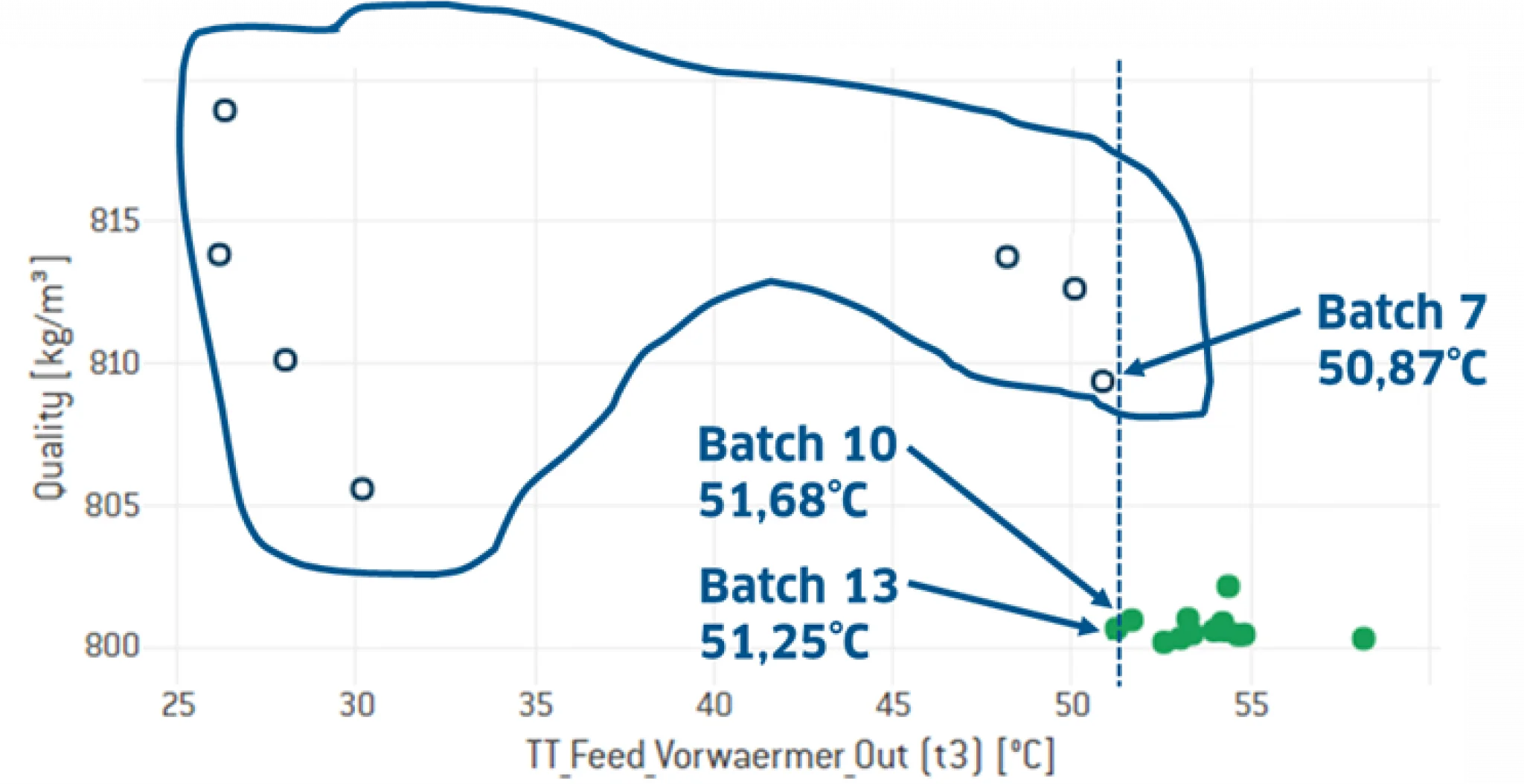

The objective was clear: separate an ethanol-water mixture into ethanol, the product, and water, the waste product. FHNW's brief to ControlTech Engineering (CTE) for the pilot project was to cut costs by reducing the consumption of steam, coolant and nitrogen while also improving quality. Product purity was measured via the density of the distillate.
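One way to make both targets measurable per batch is to normalize the utility consumption by the amount of ethanol produced and to check purity against a density limit. The sketch below is a hypothetical illustration, not the pilot project's actual evaluation: the `Batch` fields, the unit prices in `UNIT_PRICES` and the 0.80 g/cm³ limit `MAX_DENSITY` are all assumptions. The only physical fact it relies on is that ethanol (about 0.789 g/cm³ at 20 °C) is lighter than water (about 0.998 g/cm³), so a lower distillate density indicates higher purity, provided densities are compared at the same reference temperature.

```python
from dataclasses import dataclass


@dataclass
class Batch:
    """One distillation batch (hypothetical structure, not the pilot's data model)."""
    batch_id: str
    steam_kg: float
    coolant_m3: float
    nitrogen_nm3: float
    ethanol_kg: float          # distillate mass collected as product
    distillate_density: float  # g/cm3, measured at a fixed reference temperature


# Hypothetical unit prices; replace with site-specific figures.
UNIT_PRICES = {"steam_kg": 0.03, "coolant_m3": 0.50, "nitrogen_nm3": 0.20}

# Hypothetical purity limit: ethanol is lighter than water, so a LOWER
# distillate density means a HIGHER ethanol purity.
MAX_DENSITY = 0.80


def utility_cost_per_kg(batch: Batch, prices: dict[str, float] = UNIT_PRICES) -> float:
    """Utility cost (steam + coolant + nitrogen) per kg of ethanol produced."""
    if batch.ethanol_kg <= 0:
        raise ValueError(f"batch {batch.batch_id}: no product collected")
    cost = (batch.steam_kg * prices["steam_kg"]
            + batch.coolant_m3 * prices["coolant_m3"]
            + batch.nitrogen_nm3 * prices["nitrogen_nm3"])
    return cost / batch.ethanol_kg


def meets_purity(batch: Batch, max_density: float = MAX_DENSITY) -> bool:
    """True if the distillate density is at or below the (hypothetical) limit."""
    return batch.distillate_density <= max_density
```

Comparing the cost KPI only across batches that pass the purity check keeps savings in steam, coolant or nitrogen from being bought at the expense of product quality.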

How the data science framework was applied in this use case:

Target definition: separate ethanol and water

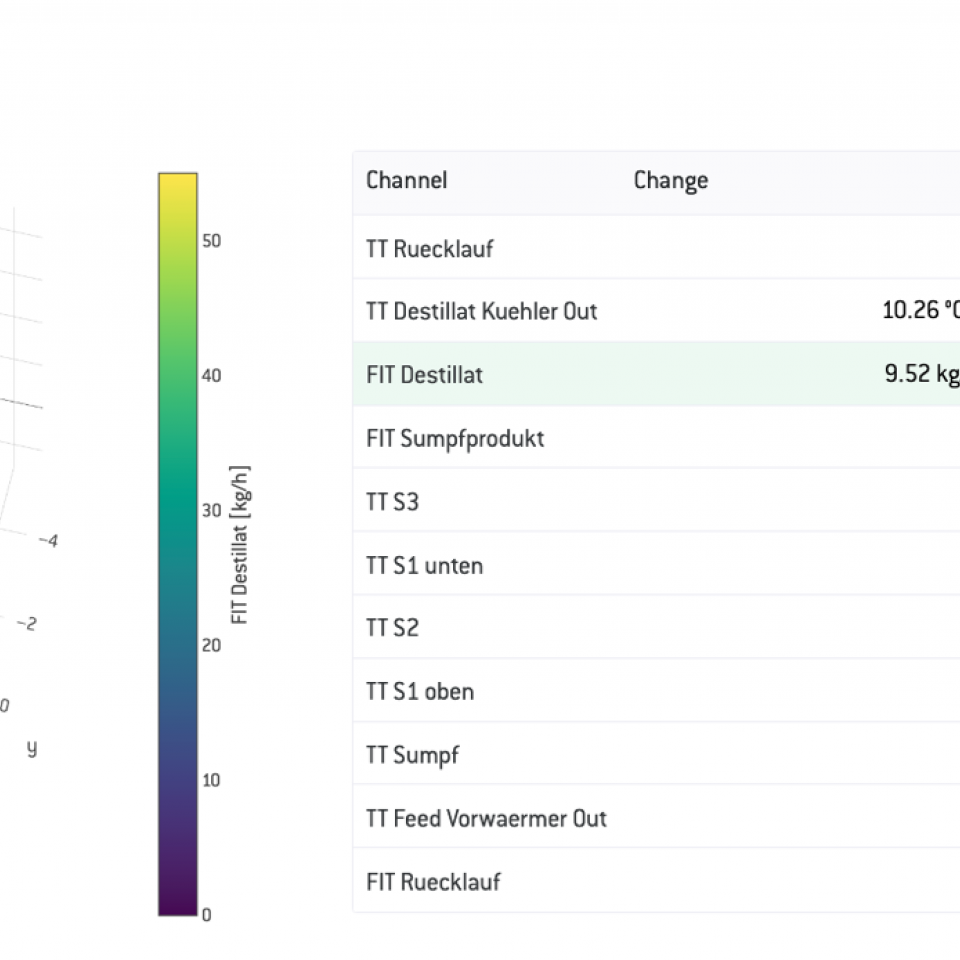

Data screening: process data contextualized in AVEVA PI Asset Framework (AF)

Assessment: too few batches and too little data; further batches available from Q3 2023
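The screening and assessment steps can be sketched in plain Python: raw time-stamped samples are assigned to batches via batch start and end times (conceptually similar to how batch windows give raw data its context in PI AF), and the assessment then checks whether enough complete batches exist. This is a minimal sketch under stated assumptions and does not use any AVEVA PI interface; the tag names in `REQUIRED_TAGS` and the thresholds `min_batches` and `min_samples_per_tag` are hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical tag names required for the separation use case.
REQUIRED_TAGS = ("steam_flow", "coolant_flow", "nitrogen_flow", "distillate_density")

Sample = tuple[datetime, str, float]  # (timestamp, tag, value)
Window = tuple[datetime, datetime]    # (batch start, batch end)


def contextualize(samples: list[Sample],
                  batches: dict[str, Window]) -> dict[str, dict[str, list[float]]]:
    """Data screening: attach each raw sample to the batch whose window contains it.

    A sample belongs to a batch if start <= timestamp < end; samples that fall
    outside every batch window are dropped.
    """
    context: dict[str, dict[str, list[float]]] = {bid: defaultdict(list) for bid in batches}
    for ts, tag, value in samples:
        for bid, (start, end) in batches.items():
            if start <= ts < end:
                context[bid][tag].append(value)
                break
    return context


def assess(context: dict[str, dict[str, list[float]]],
           min_batches: int = 10,
           min_samples_per_tag: int = 5) -> tuple[bool, list[str]]:
    """Assessment: return (is_sufficient, ids of complete batches).

    A batch counts as complete if every required tag has at least
    min_samples_per_tag values; the data set is sufficient if at least
    min_batches batches are complete. Both thresholds are hypothetical.
    """
    complete = [
        bid for bid, tags in context.items()
        if all(len(tags.get(tag, [])) >= min_samples_per_tag for tag in REQUIRED_TAGS)
    ]
    return len(complete) >= min_batches, complete
```

Separating the two functions mirrors the framework's steps: contextualization decides which data belongs to which batch, while the assessment only judges whether the contextualized data is sufficient, which is exactly the point at which the pilot found too few batches.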